Technologies: Python, MSSQL, Excel, Windows Service

Migrating to New Software Creates a Need for Website Scraping Solutions

The problem is that a company often wants to keep information that is not readily available through a database or an API. Instead, the information is spread across hundreds, if not thousands, of different sections within the prior software.

Our development team has encountered this issue many times, which makes them well suited to build solutions that let companies hit the ground running with new software.

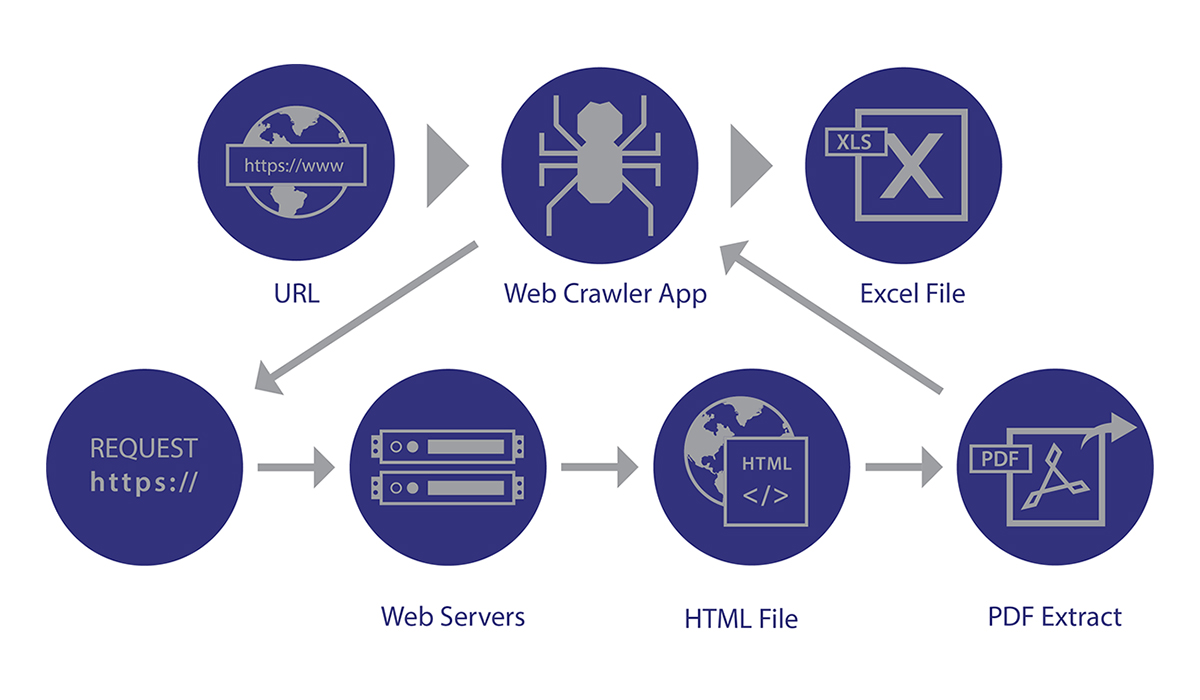

Developers Can Scrape Website Content, Download Files, and Store Information in a Database

Real-World Web Scraping Example

Website Scraping Saved Time and Money by Efficiently Grabbing the Data

- Log in to a secure website

- Navigate through the records of thousands of people

- Check for a particular file

- Download the file with a specific naming convention

- Store a record of the data in an Excel spreadsheet
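The steps above could be sketched in Python roughly as follows. This is a minimal, standard-library-only illustration, not the script used for this client. The portal URL, login path, form field names (`username`, `password`), record URL pattern, link text `Signed Agreement`, and `LASTNAME_FIRSTNAME_ID.pdf` naming convention are all hypothetical placeholders. A real portal may also require CSRF tokens, or a browser automation tool if its pages are rendered with JavaScript.

```python
import http.cookiejar
import re
import urllib.parse
import urllib.request
from html.parser import HTMLParser
from pathlib import Path

# Hypothetical values: the real site's URLs and form fields are not published.
BASE_URL = "https://portal.example.com"
LOGIN_PATH = "/login"
RECORD_PATH = "/people/{record_id}"
TARGET_LABEL = "Signed Agreement"  # hypothetical text of the link to look for


class LinkFinder(HTMLParser):
    """Collects (href, link text) pairs from an HTML page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only collect text while inside an <a> that has an href.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def find_file_link(html, label=TARGET_LABEL):
    """Check for the particular file: return its href, or None if absent."""
    finder = LinkFinder()
    finder.feed(html)
    for href, text in finder.links:
        if text.lower() == label.lower():
            return href
    return None


def build_filename(record_id, last_name, first_name, ext=".pdf"):
    """Hypothetical naming convention: LASTNAME_FIRSTNAME_ID.pdf, filesystem-safe."""
    stem = f"{last_name}_{first_name}_{record_id}".upper()
    stem = re.sub(r"[^A-Z0-9_]+", "", stem)  # drop spaces, apostrophes, etc.
    return stem + ext


def make_session(username, password):
    """Log in once and return an opener that keeps the session cookie."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    form = urllib.parse.urlencode({"username": username, "password": password}).encode()
    opener.open(BASE_URL + LOGIN_PATH, data=form)  # sent as POST because data is given
    return opener


def scrape_record(opener, record_id, last_name, first_name, out_dir):
    """Visit one person's page and download the target file if it exists."""
    page_url = BASE_URL + RECORD_PATH.format(record_id=record_id)
    html = opener.open(page_url).read().decode("utf-8", errors="replace")
    result = {"id": record_id, "name": f"{first_name} {last_name}", "file": None}
    href = find_file_link(html)
    if href is None:
        return result
    target = Path(out_dir) / build_filename(record_id, last_name, first_name)
    with opener.open(urllib.parse.urljoin(page_url, href)) as resp:
        target.write_bytes(resp.read())
    result["file"] = str(target)
    return result
```

A driver loop would call `make_session` once, then `scrape_record` for each person, collecting the returned dictionaries for the spreadsheet step. Keeping link detection and file naming in pure functions lets them be tested without touching the live site.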

The company now has thousands of files stored in one location, with an accompanying spreadsheet that makes the documents much easier for users to browse.
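As a minimal sketch of such an index, the function below writes one row per person with Python's standard `csv` module. The result is a CSV file that Excel opens directly. CSV is swapped in for a native `.xlsx` workbook only to keep the example dependency-free; a library such as openpyxl would be needed to write `.xlsx`. The record keys `id`, `name`, and `file` are hypothetical.

```python
import csv


def write_index(records, index_path):
    """Write a spreadsheet index of scraped records and return the row count.

    records: iterable of dicts with hypothetical keys "id", "name", and "file"
    ("file" is None when the person's page had no matching document).
    """
    fieldnames = ["id", "name", "file", "has_file"]
    count = 0
    # utf-8-sig adds a byte-order mark so Excel detects UTF-8 (accented names).
    with open(index_path, "w", newline="", encoding="utf-8-sig") as fh:
        writer = csv.DictWriter(fh, fieldnames=fieldnames)
        writer.writeheader()
        for rec in records:
            path = rec.get("file")
            writer.writerow({
                "id": rec["id"],
                "name": rec.get("name", ""),
                "file": path or "",
                "has_file": "yes" if path else "no",
            })
            count += 1
    return count
```

The `has_file` column lets a user filter in Excel for people whose document was missing, which is usually the follow-up question after a bulk download.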

Capitol Tech Solutions' team of developers has the expertise to extract data from the web with custom website scraping scripts. Contact us today and save hundreds of hours of manual labor migrating your data.
